Blocking abusers with Anubis


I don't know about the rest of you, but I'm over the massive, abusive scraping that has taken off over the last 6 to 12 months. Bots that identify as bots I can deal with; bots pretending to be human are a much harder problem to solve. It's led me to block huge swathes of the internet, including several entire countries.

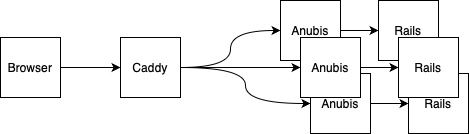

I recently came across Anubis, a project that aims to slow down the torrent of abusive clients. It sits between your load balancer and your app as a reverse proxy and serves an interstitial page. That page contains some JavaScript that has to solve a proof-of-work challenge: the browser takes some data sent by Anubis, appends its own value (the 'nonce'), and calculates the SHA-256 hash. To pass, the hash must start with a set number of zeros (the difficulty). The browser keeps incrementing the nonce and rehashing until it finds a hash that passes. The winning nonce is sent back to Anubis, which validates it. If the check succeeds, the browser is forwarded to your app, and Anubis stays out of the way for 7 days by default.
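To make the challenge concrete, here is a minimal sketch of the scheme in Python rather than the browser's JavaScript. It assumes the nonce is appended to the challenge data as a decimal string and that the difficulty counts leading zero hex characters of the SHA-256 digest; the challenge value and the function names are hypothetical stand-ins, not Anubis's actual code.

```python
import hashlib
import secrets


def attempt_hash(challenge: str, nonce: int) -> str:
    # Assumption: the nonce is appended to the challenge as a decimal string
    # and the digest is compared in hex form.
    return hashlib.sha256(f"{challenge}{nonce}".encode("utf-8")).hexdigest()


def solve(challenge: str, difficulty: int) -> tuple[int, str]:
    """Browser side: try nonces 0, 1, 2, ... until the hash starts with
    `difficulty` zero hex digits, then return the winning nonce and hash."""
    target = "0" * difficulty
    nonce = 0
    while True:
        digest = attempt_hash(challenge, nonce)
        if digest.startswith(target):
            return nonce, digest
        nonce += 1


def verify(challenge: str, difficulty: int, nonce: int) -> bool:
    """Server side: checking the answer costs a single hash."""
    return attempt_hash(challenge, nonce).startswith("0" * difficulty)


if __name__ == "__main__":
    # Hypothetical stand-in for the random data Anubis hands the browser.
    challenge = secrets.token_hex(32)
    nonce, digest = solve(challenge, difficulty=4)
    print(f"nonce={nonce} hash={digest}")
    print("valid:", verify(challenge, 4, nonce))
```

Under the hex-digit assumption, each extra zero multiplies the expected number of attempts by 16, so a difficulty of 4 means roughly 16^4 = 65,536 hashes for the browser, while the server's check is one hash. That asymmetry is what keeps the page cheap to verify but costly to solve over and over.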

So, the question is: will this stop abusive traffic? It will certainly stop any client that a) doesn't identify itself as a bot and b) doesn't run JavaScript. I'm not sure how sophisticated these bots-pretending-to-be-human are, but it feels like a reasonable chunk of them must be simple web scrapers masquerading as browsers.

I'm willing to try it and see whether traffic drops. Here's how I set it up.

Hosting

Anubis can't load balance across multiple backends, so you'll need to run it right next to your Rails app rather than alongside your load balancer. For Booko, that means three instances running in Docker on the same hosts as the Rails servers.

Health Check Headers

Anubis requires the X-Real-IP and X-Forwarded-For headers, even on health checks, so your load balancer needs to send them. In Caddy, I do this:

```caddyfile
# Health checks (these go inside the reverse_proxy block)
health_uri /up
health_interval 10s
health_timeout 5s
fail_duration 30s
max_fails 3
health_headers {
    X-Real-IP < IP ADDRESS OF LOADBALANCER >
    X-Forwarded-For < IP ADDRESS OF LOADBALANCER >
}
```

Allow the Health Check

Tell Anubis to allow the health check URL in its bot policy file:

```yaml
bots:
  - name: health-check
    path_regex: ^/up$
    action: ALLOW

  - name: internal-traffic
    remote_addresses: ["100.0.0.0/8"]
    action: ALLOW
```

Running Anubis in Docker

I use Docker Compose to run Anubis. Here's the compose file I'm using:

```yaml
services:
  anubis:
    image: ghcr.io/techarohq/anubis:latest
    restart: unless-stopped
    environment:
      BIND: ":8080" # Anubis listens here
      TARGET: "http://${HOST_IP}:9292" # Forwards to Rails
      DIFFICULTY: "4"
      POLICY_FNAME: "/data/cfg/botPolicy.yaml"
      ED25519_PRIVATE_KEY_HEX: <generated key in hex>
    ports:
      - "${HOST_IP}:8080:8080"
    volumes:
      - "./config:/data/cfg:ro"
```

I'm setting HOST_IP in the .env file so Anubis can forward traffic to Rails.
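The compose file needs a value for ED25519_PRIVATE_KEY_HEX. Here is a minimal sketch for generating one, assuming Anubis wants a 32-byte ed25519 seed encoded as 64 hex characters (the same shape `openssl rand -hex 32` produces). The function names are hypothetical. Since the load balancer can send a visitor to any of the three hosts, it probably makes sense to give every instance the same key, so a cookie signed by one instance still validates on the others; that is an assumption about how the signed cookie is checked, not something confirmed here.

```python
import secrets


def generate_key_hex() -> str:
    """Return 32 random bytes from the OS CSPRNG as 64 hex characters.

    Assumption: Anubis uses this value as a raw ed25519 seed.
    """
    return secrets.token_hex(32)


def is_valid_key_hex(value: str) -> bool:
    """Sanity-check a key before pasting it into .env: 64 hex chars, 32 bytes."""
    if len(value) != 64:
        return False
    try:
        return len(bytes.fromhex(value)) == 32
    except ValueError:
        return False


if __name__ == "__main__":
    # Print a line suitable for the .env file that already holds HOST_IP.
    print(f"ED25519_PRIVATE_KEY_HEX={generate_key_hex()}")
```

With the key in .env, the placeholder in the compose file can become `ED25519_PRIVATE_KEY_HEX: "${ED25519_PRIVATE_KEY_HEX}"`, because Compose substitutes variables from .env the same way it does for HOST_IP.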
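The volume mount is how botPolicy.yaml, with the health-check and internal-traffic rules, reaches the container. To see what those two rules do, here is a rough Python model of matching a request against them. It is a sketch under assumptions, not Anubis's code: it assumes rules are checked top to bottom with the first match winning, that a rule matches only if all of its conditions match, and that the client address comes from the X-Real-IP header, which would explain why Anubis insists on that header even for the health check. `Rule`, `evaluate` and `RULES` are hypothetical names.

```python
import ipaddress
import re
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Rule:
    """Hypothetical mirror of one entry under `bots:` in botPolicy.yaml."""
    name: str
    action: str
    path_regex: Optional[str] = None
    remote_addresses: List[str] = field(default_factory=list)

    def matches(self, path: str, client_ip: str) -> bool:
        # Every condition the rule defines must match; unset conditions are ignored.
        if self.path_regex is not None and re.search(self.path_regex, path) is None:
            return False
        if self.remote_addresses:
            ip = ipaddress.ip_address(client_ip)
            if not any(ip in ipaddress.ip_network(cidr) for cidr in self.remote_addresses):
                return False
        return True


def evaluate(rules: List[Rule], path: str, headers: Dict[str, str]) -> Optional[str]:
    """Return 'name:ACTION' for the first matching rule, or None if nothing matches."""
    # Assumption: the client IP is taken from X-Real-IP (set by the load balancer);
    # a request without it raises KeyError here.
    client_ip = headers["X-Real-IP"]
    for rule in rules:
        if rule.matches(path, client_ip):
            return f"{rule.name}:{rule.action}"
    return None


RULES = [
    Rule("health-check", "ALLOW", path_regex=r"^/up$"),
    Rule("internal-traffic", "ALLOW", remote_addresses=["100.0.0.0/8"]),
]

if __name__ == "__main__":
    print(evaluate(RULES, "/up", {"X-Real-IP": "203.0.113.9"}))     # health-check:ALLOW
    print(evaluate(RULES, "/books", {"X-Real-IP": "100.64.1.2"}))   # internal-traffic:ALLOW
    print(evaluate(RULES, "/books", {"X-Real-IP": "203.0.113.9"}))  # None
```

The model also shows why `^/up$` is tighter than `^/up*$`: in the latter, the `*` applies only to the `p`, so it would also match `/u` and `/upp`.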

Apparently Anubis also exports Prometheus-compatible statistics on port 9090, so I'll have a look at integrating that too.
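Before wiring those statistics into Prometheus, a quick way to check the endpoint is to fetch and parse it by hand. This is a minimal sketch assuming the metrics are served at `/metrics` (the usual Prometheus path, assumed rather than confirmed for Anubis) and that port 9090 is reachable; the compose file above only publishes 8080, so 9090 would need its own `ports` entry. The parser handles only the simple `name{labels} value` lines of the text format, and the metric names in the sample are made up.

```python
import re
import urllib.request
from typing import Dict

# Metric name, optional {labels}, then the sample value; a trailing timestamp is ignored.
# Limitation: label values containing "}" are not handled.
SAMPLE_LINE = re.compile(r"^([a-zA-Z_:][a-zA-Z0-9_:]*)(\{[^}]*\})?\s+(\S+)")


def parse_metrics(text: str) -> Dict[str, float]:
    """Map 'name{labels}' to its value, skipping blank lines and # HELP/# TYPE comments."""
    samples: Dict[str, float] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        match = SAMPLE_LINE.match(line)
        if match:
            name, labels, value = match.group(1), match.group(2) or "", match.group(3)
            samples[name + labels] = float(value)  # float() also accepts NaN and +Inf
    return samples


def fetch_metrics(host: str, port: int = 9090) -> Dict[str, float]:
    # Assumption: the endpoint path is /metrics.
    url = f"http://{host}:{port}/metrics"
    with urllib.request.urlopen(url, timeout=5) as response:
        return parse_metrics(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Made-up sample in the Prometheus text format; real Anubis metric names may differ.
    sample = """# HELP example_requests_total Made-up counter.
# TYPE example_requests_total counter
example_requests_total{result="pass"} 42
example_requests_total{result="fail"} 7 1700000000000
"""
    print(parse_metrics(sample))
```

Against a live instance, `fetch_metrics(...)` takes the host's address (HOST_IP in this setup) and returns the same kind of dictionary.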

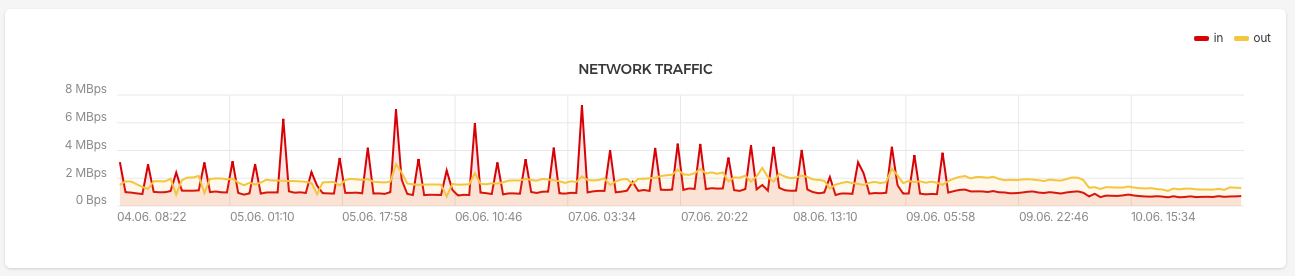

Update:

Network traffic after setting up Anubis. It certainly has an effect.